If you are like me and spend a lot of time looking at assembly language output from compilers, this article may not provide much new information. If not, however, you will hopefully find it interesting.

The thing I want to show is how seemingly lengthy and complex C++ code can be compiled into very compact machine code.

The C++ code

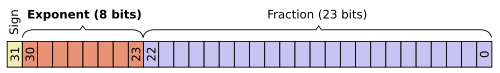

The C++ code snippet that we will compile defines two public functions, get_exponent() and set_exponent(), that extract and manipulate the exponent part of an IEEE 754 single-precision floating-point number.

In short, what we need to do is:

- Convert between floating-point and integer types, using type punning, in order to access and manipulate the exponent bits of the IEEE 754 binary representation. We do this with a custom template function, raw_cast().

- Shift and mask the binary representation of the floating-point value so as to extract/insert the exponent bits from/to the correct location in the integer word.

- Note that the type punning part is implemented by calling the standard std::memcpy() function, which is a well-defined way of doing it (i.e. without invoking undefined behavior).
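As a worked example of the bit layout that these shifts and masks rely on, the sketch below splits a binary32 bit pattern into its sign, exponent and mantissa fields. The helper names are hypothetical (they are not part of the article's code), and the bit patterns are given as integer constants so that the checks can run at compile time; getting them from an actual float would go through the type punning step described above.

```cpp
#include <cstdint>

// Hypothetical helpers (not from the article) that split an IEEE 754
// binary32 bit pattern into its three fields using shifts and masks:
//   bit 31      : sign
//   bits 23..30 : biased exponent (8 bits)
//   bits 0..22  : mantissa / fraction (23 bits)
constexpr std::uint32_t sign_field(std::uint32_t bits) { return bits >> 31; }
constexpr std::uint32_t exponent_field(std::uint32_t bits) { return (bits >> 23) & 0xffU; }
constexpr std::uint32_t mantissa_field(std::uint32_t bits) { return bits & 0x7fffffU; }

// Known bit patterns: 1.0f == 0x3F800000, -0.5f == 0xBF000000, 1.5f == 0x3FC00000.
static_assert(exponent_field(0x3f800000U) == 127U, "1.0f = +1.0 * 2^(127 - 127)");
static_assert(sign_field(0xbf000000U) == 1U && exponent_field(0xbf000000U) == 126U,
              "-0.5f = -1.0 * 2^(126 - 127)");
static_assert(mantissa_field(0x3fc00000U) == 0x400000U,
              "1.5f = 1.1 (binary), i.e. only fraction bit 22 is set");
```

The exponent is stored with a bias of 127, which is why get_exponent() returns 127 rather than 0 for 1.0f.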

This is what the C++ code looks like:

```cpp
#include <cstdint>
#include <cstring>

namespace {

// Type punning cast.
template <typename T2, typename T1>
T2 raw_cast(const T1 x)
{
    static_assert(sizeof(T1) == sizeof(T2));

    // One valid way of doing a type punning cast in
    // C/C++ is to use memcpy().
    T2 y;
    std::memcpy(&y, &x, sizeof(T2));
    return y;
}

} // namespace

// Extract the exponent from an IEEE 754 binary32
// floating-point value.
uint32_t get_exponent(float x)
{
    auto xi = raw_cast<uint32_t>(x);

    // Extract the 8-bit exponent (bits 23..30).
    auto exp = (xi >> 23) & 0xffU;
    return exp;
}

// Replace the exponent of an IEEE 754 binary32
// floating-point value.
float set_exponent(float x, uint32_t exp)
{
    auto xi = raw_cast<uint32_t>(x);

    // Insert the 8-bit exponent into bits 23..30.
    const auto MASK = 0x7f800000U;
    auto yi = (xi & ~MASK) | ((exp << 23) & MASK);
    auto y = raw_cast<float>(yi);
    return y;
}
```

As an exercise, try to figure out what the resulting (compiled) assembly language code would look like (i.e. roughly what CPU instructions would be used to implement the above code).

At first glance it looks like we would need to do a number of logical and shift operations in each function, along with inlined copies of the raw_cast() function, and, ugh, calls to the standard C library function memcpy() too, which probably requires storing the floating-point and integer values on the stack or something…

But let’s see what actually happens.

The assembly language code

I used GCC 12 (current trunk), and compiled the C++ code for the MRISC32 instruction set, using optimization level -O2.

This is the assembly language code that we get:

```
get_exponent:
    ebfu  r1, r1, #<23:8>   ; Extract bit field (unsigned)
    ret                     ; Return

set_exponent:
    ibf   r1, r2, #<23:8>   ; Insert bit field
    ret                     ; Return
```

Just to be clear: What we are looking at here is the complete and correct assembly language implementation of the C++ code above, and each function consists of only two machine code instructions (including the mandatory return-from-subroutine instruction, ret).

For reference, this means that the execution time for each function is on the order of a single CPU clock cycle (!).

That’s… I mean… Wow!

But, how?

(Note: Function arguments are passed in registers r1, r2, …, and the result value is returned in register r1. For more detailed information feel free to read the MRISC32 Instruction Set Manual.)

C++ compiler cleverness

Most of the magic comes from all of the clever tricks that have been built into the GCC compiler stack over the years. Even the fairly simple and immature MRISC32 back end (“machine description” in GCC terms) benefits from most of the optimization and code transformation tricks that the compiler knows about.

For instance:

- The raw_cast() template function, having internal linkage, will be inlined and no separate code will be generated for it.

- The compiler recognizes the call to memcpy(), and since the copy length argument is constant the compiler replaces the call with an optimized code sequence. In this case it will just be a simple register move operation – which for the MRISC32, with its combined integer & floating-point register file, will actually be eliminated completely! Zero CPU instructions needed!

- The mask-and-shift operations are recognized by the compiler as being bit field operations, and appropriate CPU instructions are used, if present. Since the MRISC32 ISA has bit field instructions (e.g. ebfu and ibf), those are used.
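To make the correspondence concrete, here is a sketch of what ebfu and ibf compute, written as plain C++. These are hypothetical models (field_mask, model_ebfu and model_ibf are names made up for this illustration), based only on the instruction comments and the C++ source, which together imply that #<23:8> describes an 8-bit field starting at bit 23. The MRISC32 Instruction Set Manual is the authority on the exact instruction semantics.

```cpp
#include <cstdint>

// Hypothetical models (not taken from the MRISC32 manual) of the two bit field
// instructions, assuming #<23:8> means "an 8-bit field starting at bit 23".
// Valid for 0 < width < 32 and offset + width <= 32 (shifting a 32-bit value
// by 32 or more would be undefined behavior).
constexpr std::uint32_t field_mask(unsigned offset, unsigned width)
{
    return ((std::uint32_t{1} << width) - 1U) << offset;
}

// ebfu: extract an unsigned bit field, zero-extended into the low bits.
// Same shape as "(xi >> 23) & 0xffU" in get_exponent().
constexpr std::uint32_t model_ebfu(std::uint32_t src, unsigned offset, unsigned width)
{
    return (src >> offset) & field_mask(0, width);
}

// ibf: insert the low `width` bits of `src` into `dst` at `offset`, leaving
// all other bits of `dst` unchanged. Same shape as the expression in
// set_exponent(), where dst is the float's bits and src is the new exponent.
constexpr std::uint32_t model_ibf(std::uint32_t dst, std::uint32_t src,
                                  unsigned offset, unsigned width)
{
    return (dst & ~field_mask(offset, width)) |
           ((src << offset) & field_mask(offset, width));
}

// The MASK constant used in set_exponent() is exactly this field.
static_assert(field_mask(23, 8) == 0x7f800000U, "binary32 exponent field");
```

With these definitions, model_ebfu(0x3F800000U, 23, 8) (the bit pattern of 1.0f) yields 127, matching get_exponent(1.0f), and model_ibf(0x3F800000U, 128U, 23, 8) yields 0x40000000U, the bit pattern of 2.0f. A single instruction that performs the whole expression is what lets the compiler collapse each function body into one operation.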

Regarding other architectures, the compiler generates good code for ARMv8 too (though it needs a few extra moves between floating-point and integer registers), but for CPUs without bit field instructions (e.g. x86-64 and RISC-V) the compiler has to generate longer sequences. Clang generates similar results, by the way.

The really neat thing here is that you get to benefit from all of these optimization tricks, regardless of whether you are a C++ developer or are adding a new back end to GCC.

Understanding compiler optimizations

Of course, this is not a representative example of C++ code in general. It is very deliberately and specifically crafted to prove a point. In fact, with a few minor modifications the compiler might produce an order of magnitude more machine code instructions (e.g. if the raw_cast() function were put in another compilation unit, triggering external linkage and preventing it from being inlined without link-time optimization).

The thing that I wanted to point out is that modern compilers are very good at optimizing code, if you provide them with information and constructs that they can work with.

In the old days you would often have to write your C/C++ code in a way that was very similar to the desired machine code if you wanted good performance, since the compiler would do a much more literal translation from C/C++ to machine code. Duff’s device is an example of this (and these days you should usually not use such explicit constructs).

With a modern compiler, it is instead important to:

- Make as much information as possible available to the compiler at compile time (rather than at run time).
  - Make sure that performance critical functions are inlined (function calls add considerable overhead).
  - Ensure that values and expressions can be evaluated at compile time.
  - Put short function definitions in include files.
  - Prefer local linkage (e.g. use anonymous namespaces).
  - Etc.
- Learn the strengths and limitations of your target architecture.
- Examine the compiler output to see if you get what you want.
- As usual, benchmark and profile your code to identify bottlenecks (perf top is a personal favorite).
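As a small illustration of the compile-time items in the list above, the sketch below isolates the integer part of set_exponent() in a constexpr function with internal linkage. The names kExponentMask and with_exponent_bits are hypothetical. The memcpy()-based raw_cast() cannot be used in a constant expression, but the shift-and-mask step can, so the static_assert below is checked entirely by the compiler and produces no run-time code.

```cpp
#include <cstdint>

namespace {

// Hypothetical constexpr counterpart (not from the article) of the integer
// part of set_exponent(). The anonymous namespace gives it internal linkage.
constexpr std::uint32_t kExponentMask = 0x7f800000U;

constexpr std::uint32_t with_exponent_bits(std::uint32_t bits, std::uint32_t exp)
{
    return (bits & ~kExponentMask) | ((exp << 23) & kExponentMask);
}

} // namespace

// Evaluated by the compiler: 1.0f (0x3F800000) with the biased exponent
// replaced by 128 becomes 2.0f (0x40000000).
static_assert(with_exponent_bits(0x3f800000U, 128U) == 0x40000000U,
              "1.0f -> 2.0f");
```

Passing an exponent wider than 8 bits is harmless here, since (exp << 23) & kExponentMask discards the extra bits, just as in set_exponent().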

Some tips

Make it a habit to inspect the compiler output every once in a while, especially for the performance critical parts of your code.

Perhaps the easiest way to inspect the code generation for small code snippets is to use Compiler Explorer. I use it all the time. It is especially useful if you target multiple compilers (GCC, Clang, MSVC, …) and/or CPU architectures (x86, ARM, PowerPC, RISC-V, …).

Another very useful tool is objdump. It comes in handy when you want to inspect the binaries that you are compiling locally. I usually run:

```sh
objdump -drC executable-or-library-or-object-file | less
```

…and then search for the name of the function of interest (in less, search with “/regex”, where regex is, for instance, the name of the function).
